Intraclass correlation coefficient

| Command: | Statistics |

Description

The Intraclass Correlation Coefficient (ICC) is a measure of the reliability of measurements or ratings.

To assess inter-rater reliability with the ICC, two or preferably more raters rate a number of study subjects.

- A distinction is made between two study models: (1) each subject is rated by a different and random selection of a pool of raters, and (2) each subject is rated by the same raters.

- In the first model, the ICC is always a measure of Absolute agreement. In the second model, a choice can be made between two types: Consistency, when systematic differences between raters are irrelevant, and Absolute agreement, when systematic differences are relevant.

For example: the paired ratings (2,4), (4,6) and (6,8) are perfectly consistent, because the second rating is always exactly 2 higher than the first. They have a consistency of 1.0, but an absolute agreement of only 0.6667.
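The two values in this example can be reproduced from a two-way analysis of variance. The following is a minimal sketch, assuming the single-measures formulas given by McGraw & Wong (1996). The function names are hypothetical, and the sketch is not a description of how the program computes its results.

```python
def two_way_mean_squares(ratings):
    """Return (MSR, MSC, MSE, n, k) for a subjects-by-raters table."""
    n = len(ratings)        # number of subjects (rows)
    k = len(ratings[0])     # number of raters (columns)
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between raters
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_error / ((n - 1) * (k - 1))
    return msr, msc, mse, n, k


def icc_single(ratings, absolute=False):
    """Single-measures ICC for the model with the same raters for all subjects."""
    msr, msc, mse, n, k = two_way_mean_squares(ratings)
    denom = msr + (k - 1) * mse
    if absolute:
        # Absolute agreement also counts systematic rater differences as error.
        denom += k / n * (msc - mse)
    return (msr - mse) / denom


pairs = [(2, 4), (4, 6), (6, 8)]
consistency = icc_single(pairs)
agreement = icc_single(pairs, absolute=True)
print(round(consistency, 4), round(agreement, 4))  # 1.0 0.6667
```

The only difference between the two types is the extra term in the denominator, which grows with the variance between raters.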

How to enter data

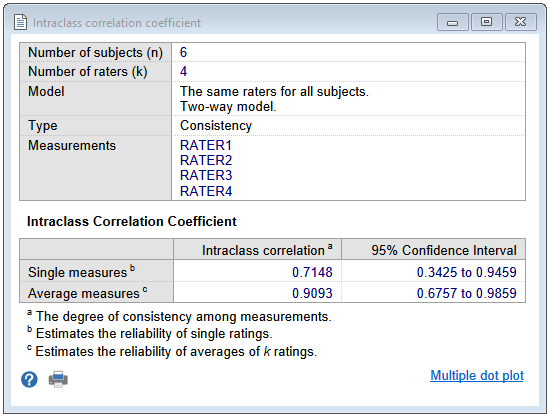

In this example (taken from Shrout PE & Fleiss JL, 1979) data are available for 4 raters on 6 subjects. The data for each subject are entered in the 4 columns.

If not all subjects are rated by the same 4 raters, the data are still entered in 4 columns; the order of the columns is then unimportant.

Required input

- Measurements: variables that contain the measurements of the different raters.

- Filter: an optional filter to include only a selected subgroup of cases.

- Options

  - Model

    - Raters for each subject were selected at random: the raters were not the same for all subjects; a random selection of raters rated each subject.

    - The same raters for all subjects: all subjects were rated by the same raters.

  - Type

    - Consistency: systematic differences between raters are irrelevant.

    - Absolute agreement: systematic differences between raters are relevant.
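When raters are selected at random for each subject, differences between raters cannot be separated from the residual error, so only a one-way analysis of variance is possible. The following is a minimal sketch of the single-measures ICC for that model, assuming the one-way formula of Shrout & Fleiss (1979). The function name is hypothetical, and the sketch is not a description of the program's internal computation.

```python
def icc_one_way_single(ratings):
    """Single-measures ICC when each subject has its own random set of raters."""
    n = len(ratings)        # number of subjects (rows)
    k = len(ratings[0])     # number of ratings per subject (columns)
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    ss_between = k * sum((m - grand) ** 2 for m in row_means)
    ss_within = sum((x - m) ** 2
                    for row, m in zip(ratings, row_means)
                    for x in row)
    msb = ss_between / (n - 1)          # between-subjects mean square
    msw = ss_within / (n * (k - 1))     # within-subjects mean square
    return (msb - msw) / (msb + (k - 1) * msw)


pairs = [(2, 4), (4, 6), (6, 8)]
# Same ratings, with the two values swapped within some rows.
shuffled = [(4, 2), (4, 6), (8, 6)]
print(round(icc_one_way_single(pairs), 4))     # 0.6
print(round(icc_one_way_single(shuffled), 4))  # 0.6
```

Swapping values within a row leaves the result unchanged, which is why the column order does not matter for this model.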

Results

The Intraclass correlation coefficient table reports two coefficients with their respective 95% confidence intervals.

- Single measures: this ICC is an index for the reliability of the ratings for one, typical, single rater.

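- Average measures: this ICC is an index for the reliability of the mean of the ratings of the different raters. For a Single measures ICC between 0 and 1, this ICC is higher than the Single measures ICC; the two are equal only when the Single measures ICC is exactly 0 or 1.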
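For the ANOVA-based formulas in Shrout & Fleiss (1979) and McGraw & Wong (1996), the two coefficients are linked by the Spearman-Brown relation. The following minimal sketch assumes that relation; the function name is hypothetical, and whether the program computes the Average measures ICC this way is an assumption.

```python
def average_from_single(icc_single, k):
    """Spearman-Brown step-up: ICC of the mean of k raters from the single-rater ICC."""
    return k * icc_single / (1 + (k - 1) * icc_single)


# Absolute agreement of the paired ratings (2,4), (4,6), (6,8): 2 raters, single ICC = 2/3.
avg = average_from_single(2 / 3, 2)
print(round(avg, 4))  # 0.8

# A single-measures ICC of exactly 1 cannot be improved by averaging.
print(average_from_single(1.0, 2))  # 1.0
```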

Literature

- McGraw KO, Wong SP (1996) Forming inferences about some intraclass correlation coefficients. Psychological Methods 1:30-46. (Correction: 1:390).

- Shrout PE, Fleiss JL (1979) Intraclass correlations: uses in assessing rater reliability. Psychological Bulletin 86:420-428.